PyTorch gives you two tools for working with a GPU. The function torch.cuda.is_available() tells you whether a GPU can be used by returning a simple True or False. The second, torch.device('cuda'), does not check anything by itself; it creates a device object that you use to place models and tensors on the GPU.

This short guide will show you, step by step, how to check if a GPU is available in PyTorch.

What is PyTorch?

PyTorch is a popular open-source framework for building and training machine learning models. It helps developers create AI applications like image and speech recognition. PyTorch is known for being easy to use and supports both CPUs and GPUs.

Checking if PyTorch is Using the GPU

To check whether PyTorch can use the GPU, call torch.cuda.is_available(). If it returns True, create a device with torch.device('cuda') and move your model to it for faster performance.

Using PyTorch with the GPU

To use PyTorch with a GPU, first check that one is available with torch.cuda.is_available(). If it is, move your model and data to torch.device('cuda'). This speeds up training and processing.

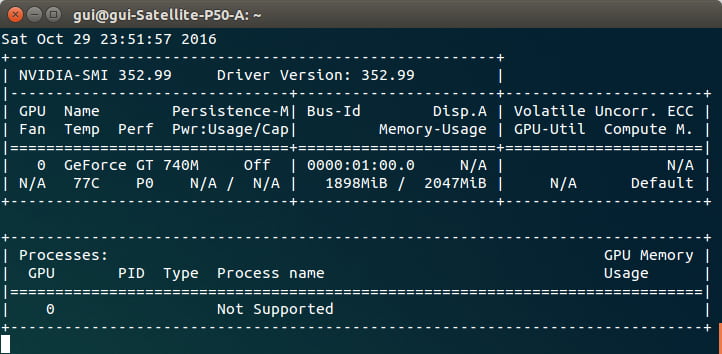

How to Check for CUDA GPU Availability?

To check for CUDA GPU availability in PyTorch, call torch.cuda.is_available(). If it returns True, a CUDA GPU is available. If it returns False, only the CPU can be used for processing.
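As a minimal sketch of that check, the snippet below also prints how many GPUs PyTorch can see and their names. The variable names are illustrative, and the output depends entirely on the machine it runs on.

```python
import torch

# True when this PyTorch build has CUDA support and can see a usable GPU.
gpu_available = torch.cuda.is_available()
print("CUDA GPU available:", gpu_available)

# Number of GPUs visible to PyTorch; 0 on a CPU-only machine.
gpu_count = torch.cuda.device_count()
print("Visible GPUs:", gpu_count)

# The loop body only runs when at least one GPU is present.
for index in range(gpu_count):
    print(f"GPU {index}: {torch.cuda.get_device_name(index)}")
```

On a machine without a GPU, this prints False and 0 and skips the loop, so it is safe to run anywhere.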

Checking GPU Availability

To check if a GPU is available in PyTorch, use torch.cuda.is_available(). If it returns True, a GPU is available for use. This helps confirm if your system can run tasks faster using the GPU instead of the CPU.

Install CUDA:

To install CUDA, go to the NVIDIA CUDA Toolkit website and choose the correct version for your operating system. Follow the instructions for installation. Make sure your GPU and drivers are compatible with the selected CUDA version.

Install cuDNN:

To install cuDNN, download it from the NVIDIA cuDNN website. Choose the version that matches your CUDA version. Extract the files, then copy them into your CUDA folder.

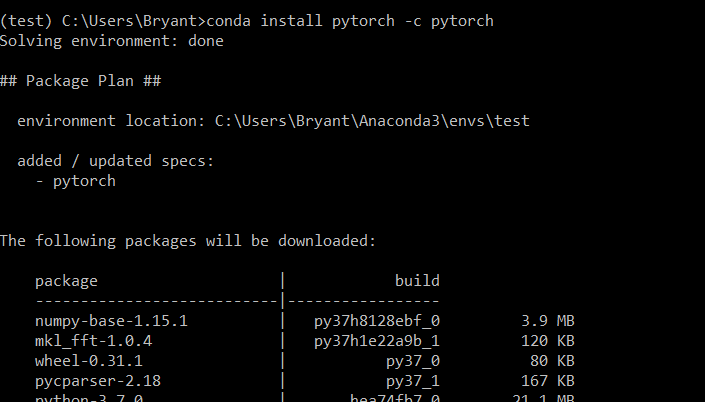

Install PyTorch:

To install PyTorch, visit the PyTorch website. Choose your operating system, package manager, and version. Copy the given command and run it in your terminal or command prompt to complete the installation.
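After installation, a quick sanity check can confirm which build you ended up with. This is a sketch rather than an official verification procedure; the variable names are illustrative.

```python
import torch

# Version string of the installed PyTorch package.
torch_version = torch.__version__
print("PyTorch version:", torch_version)

# CUDA version this PyTorch build was compiled against.
# This is None when a CPU-only build was installed.
built_cuda_version = torch.version.cuda
print("Built with CUDA:", built_cuda_version)

# Whether cuDNN can be used from this build.
cudnn_available = torch.backends.cudnn.is_available()
print("cuDNN available:", cudnn_available)
```

If the CUDA line prints None, the installed package is a CPU-only build, and torch.cuda.is_available() will return False regardless of the hardware.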

Check Availability:

To check availability in PyTorch, use torch.cuda.is_available(). If it returns True, a GPU is available for use. This command helps confirm whether your system can use GPU resources or will rely on the CPU.

Specify Device:

To specify a device in PyTorch, use torch.device('cuda') for the GPU or torch.device('cpu') for the CPU. Then, move your model and data to this device using .to(device) to ensure proper processing on the selected hardware.

Setting the Device in PyTorch Code

To set the device in PyTorch, use device = torch.device('cuda' if torch.cuda.is_available() else 'cpu'). This code automatically chooses the GPU if available; otherwise, it selects the CPU. Use .to(device) to send your model and data to the chosen device.
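Put together, the device selection and the .to(device) calls might look like the sketch below. The tiny nn.Linear model and the random input are placeholders chosen for illustration, not part of any real project.

```python
import torch
from torch import nn

# Pick the GPU when one is available, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Placeholder model and input batch, both sent to the chosen device.
model = nn.Linear(in_features=4, out_features=2).to(device)
inputs = torch.randn(8, 4).to(device)

# The forward pass runs on whichever device was selected.
outputs = model(inputs)
print(outputs.shape, outputs.device)
```

Because the device is chosen once at the top, the same script runs unchanged on machines with and without a GPU.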

Specifying the Device:

Specifying the device in PyTorch means choosing between the CPU and the GPU for processing. Use torch.device('cuda') for the GPU and torch.device('cpu') for the CPU. Use .to(device) to transfer your model and data to the chosen device so the computation runs there.

Sending Tensors to the Device:

Sending tensors to a device in PyTorch means moving data to the CPU or GPU. Use .to(device) to transfer tensors. For example, if device = torch.device('cuda'), use tensor = tensor.to(device) to send it to the GPU.
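One detail worth showing: for tensors, .to(device) returns a tensor on the target device rather than changing the original in place, which is why the result is assigned back. The sketch below uses made-up values and falls back to the CPU when no GPU is present.

```python
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# With default settings, new tensors are created on the CPU.
tensor = torch.tensor([1.0, 2.0, 3.0])
print("Before:", tensor.device)

# Assign the result back; calling tensor.to(device) alone would not
# change which tensor the name refers to.
tensor = tensor.to(device)
print("After:", tensor.device)

# A tensor can also be created directly on the target device.
ones = torch.ones(3, device=device)

# Both operands live on the same device, so the addition works.
total = tensor + ones
print(total)
```

Mixing a CPU tensor with a GPU tensor in one operation raises a RuntimeError, so keeping every operand on the same device is the main thing to watch.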

Moving Model and Data to GPU

Choosing a GPU:

To move your model and data to a GPU in PyTorch, use .to('cuda'). To choose a specific GPU, use torch.device('cuda:0'), where 0 is the GPU index. Training and processing then run on the selected GPU.
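On machines with several GPUs, it helps to confirm that the requested index exists before using it. The helper below, pick_device, is a hypothetical function written for this guide and is not part of PyTorch.

```python
import torch


def pick_device(gpu_index: int = 0) -> torch.device:
    """Return cuda:<gpu_index> if that GPU exists, otherwise the CPU.

    Hypothetical helper for illustration; not a PyTorch API.
    """
    if torch.cuda.is_available() and 0 <= gpu_index < torch.cuda.device_count():
        return torch.device(f"cuda:{gpu_index}")
    return torch.device("cpu")


device = pick_device(0)
print("Using device:", device)
```

Asking for an index that does not exist, such as pick_device(10_000) on an ordinary workstation, simply returns the CPU device instead of failing later during training.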

Moving Tensors to GPU:

To move tensors to a GPU in PyTorch, use tensor.to('cuda'). This command transfers the tensor from the CPU to the GPU for faster calculations. Make sure the GPU is available by checking with torch.cuda.is_available() before moving.

Moving Models to GPU:

To move your models to a GPU in PyTorch, use model.to('cuda'). This shifts the model from the CPU to the GPU, making training faster. First, check if a GPU is available by using torch.cuda.is_available().
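Unlike tensors, a model (an nn.Module) is moved in place: model.to(device) updates the module's parameters and returns the same object. The short sketch below uses a placeholder network to show how to confirm where a model currently lives.

```python
import torch
from torch import nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Placeholder network used only for illustration.
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 1))

# For modules, .to() moves the parameters in place and returns the same module.
returned = model.to(device)
print("Same object:", returned is model)

# A model has no single .device attribute, so inspect one of its parameters.
param_device = next(model.parameters()).device
print("Model parameters are on:", param_device)
```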

Moving DataLoaders to GPU:

You cannot move DataLoaders directly to a GPU in PyTorch. Instead, send each tensor to the GPU while training. Use the DataLoader to load data, and then send each batch to the GPU using .to('cuda') while training your model.
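A training loop that follows this pattern could look like the sketch below. The random dataset, the linear model, and the hyperparameters are all made up for illustration; only the per-batch .to(device) calls are the point.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Made-up dataset: 32 samples with 4 features and 1 target each.
features = torch.randn(32, 4)
labels = torch.randn(32, 1)
loader = DataLoader(TensorDataset(features, labels), batch_size=8)

model = nn.Linear(4, 1).to(device)
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

batches_seen = 0
for batch_features, batch_labels in loader:
    # The DataLoader yields CPU tensors; move each batch to the device.
    batch_features = batch_features.to(device)
    batch_labels = batch_labels.to(device)

    optimizer.zero_grad()
    loss = loss_fn(model(batch_features), batch_labels)
    loss.backward()
    optimizer.step()
    batches_seen += 1

print("Batches processed:", batches_seen)
```

Moving one batch at a time keeps the full dataset in CPU memory, so only the current batch takes up GPU memory.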

Using PyTorch with the GPU

Using PyTorch with a GPU speeds up training and processing. First, see if a GPU is available by using torch.cuda.is_available(). Then, move your model and data to the GPU using .to('cuda') for faster performance during computations.

Common Errors and How to Handle Them

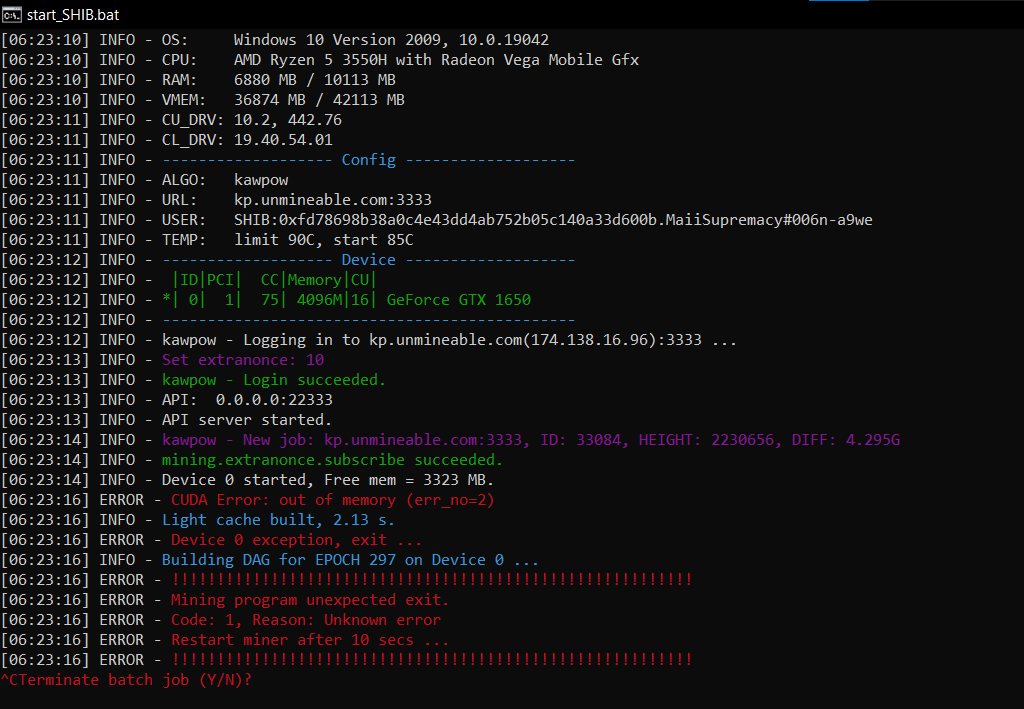

Error 1: CUDA Error

A CUDA error message in PyTorch means there is a problem with GPU processing. Common issues include out-of-memory errors or incompatible CUDA versions. To fix it, check your GPU memory, update your drivers, and make sure your model and tensors are all on the same device.

Error 2: Incorrect CUDA Version

This error message means that the installed CUDA version does not match the version required by your PyTorch installation. To fix this, check the PyTorch website for the correct version and install it to avoid compatibility issues.

Error 3: GPU Memory Issues

This error message occurs when your program tries to use more memory than the GPU can provide. To fix this, reduce the batch size in your code, close other programs using the GPU, or upgrade your GPU.
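One way to apply the "reduce the batch size" advice automatically is to retry with a smaller batch whenever an out-of-memory error is raised. The sketch below is one possible approach, not a built-in PyTorch feature: run_with_smaller_batches and fake_step are hypothetical names, and fake_step only simulates the error so the example runs without a GPU.

```python
import torch


def run_with_smaller_batches(step_fn, batch_size, min_batch_size=1):
    """Call step_fn(batch_size), halving batch_size after each out-of-memory error.

    Hypothetical helper for illustration; not a PyTorch API.
    """
    while batch_size >= min_batch_size:
        try:
            return batch_size, step_fn(batch_size)
        except RuntimeError as error:
            # CUDA out-of-memory errors are RuntimeErrors; re-raise anything else.
            if "out of memory" not in str(error).lower():
                raise
            # Release cached GPU memory before retrying (does nothing without a GPU).
            torch.cuda.empty_cache()
            batch_size //= 2
    raise RuntimeError("Out of memory even at the minimum batch size")


def fake_step(batch_size):
    # Stand-in for a real training step: pretends batches above 8 do not fit.
    if batch_size > 8:
        raise RuntimeError("CUDA out of memory (simulated)")
    return f"ran with batch size {batch_size}"


used_batch_size, result = run_with_smaller_batches(fake_step, batch_size=64)
print(used_batch_size, result)
```

In real code, step_fn would build a batch of the given size and run a forward and backward pass; the retry logic stays the same.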

Frequently Asked Questions:

1. How do I check if a GPU is available in PyTorch?

To find out if a GPU is available in PyTorch, use the command torch.cuda.is_available(). If it returns True, a GPU is ready for use. If it returns False, only the CPU is available for running tasks.

2. Why do I need to check if a GPU is available?

Checking for a GPU lets your program use it when one is present and fall back to the CPU when it is not. GPUs can handle many calculations at once, speeding up tasks like training machine learning models. Without the check, code that assumes a GPU will fail on a machine that has none.

3. What if I get an error saying CUDA out of memory?

If you see a CUDA out of memory error, it means your GPU doesn’t have enough memory for the task. To fix this, reduce the batch size, close other programs using the GPU, or consider upgrading to a GPU with more memory.

4. Are there any online resources for PyTorch troubleshooting and error messages?

Yes, there are many online resources for PyTorch troubleshooting. The official PyTorch documentation is helpful. You can also find answers on forums like Stack Overflow and PyTorch Discuss for common error messages and solutions.

5. Can I automatically check for GPU availability in my PyTorch code?

Yes, you can automatically check for GPU availability in your PyTorch code. Use this line: device = torch.device('cuda' if torch.cuda.is_available() else 'cpu'). This code sets the device to GPU if available; otherwise, it uses the CPU.

Conclusion

In conclusion, checking GPU availability in PyTorch is essential for optimizing performance. Use torch.cuda.is_available() to see whether a GPU is present, then move your model and data to it for faster training and processing.