GPUs can face cache coherence issues because they often have multiple memory caches working in parallel. Keeping all caches synchronized when accessing shared data is challenging, especially in systems with both a CPU and a GPU.

This article explores how GPUs can face cache coherence problems when multiple processors access shared memory, which makes data updates harder to synchronize. If not managed properly, this can lead to slower performance or incorrect results.

Cache Coherency and Shared Virtual Memory

Cache coherency ensures that all processors, like the CPU and GPU, have the most updated data when they access shared memory. Shared Virtual Memory allows both CPU and GPU to use the same memory space.

If cache coherency isn’t managed well, data conflicts can happen, which may cause performance issues or errors in processing tasks.

What is Shared Virtual Memory?

Shared Virtual Memory (SVM) allows the CPU and GPU to use the same memory space. This means they can easily share data without copying it between their separate memories.

SVM helps improve performance and simplify programming because both the CPU and GPU can access and work with the same data simultaneously.
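
The difference between copying data and sharing one memory space can be sketched with a toy model. This is only an illustration in plain Python: `gpu_double` is a hypothetical stand-in for a GPU kernel, and the lists stand in for host and device memory. No real GPU API is used.

```python
# Toy model: the "GPU" is an ordinary Python function, not a real device.

def gpu_double(buffer):
    """Hypothetical 'GPU kernel': doubles every element in place."""
    for i in range(len(buffer)):
        buffer[i] *= 2

# Without shared memory: data is copied to a separate device buffer and back.
host_data = [1, 2, 3, 4]
device_copy = list(host_data)   # copy host -> device
gpu_double(device_copy)
stale_host = list(host_data)    # the host still holds the old values here
host_data = list(device_copy)   # copy device -> host

# With a shared address space: both sides refer to the same buffer.
shared = [1, 2, 3, 4]
gpu_double(shared)              # no copies; the host sees the result directly
```

In the copy-based path the host only sees the new values after the explicit copy back, while in the shared path there is a single buffer and nothing to copy.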

What is Cache Coherency?

Cache coherency makes sure that when different processors use shared data, they all have the latest version. If one processor changes the data, the others need to know right away.

Without cache coherency, they might work with old information, causing errors or slowing down performance in a system.
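
A small simulation can make the stale-data problem concrete. The sketch below is a simplified model, not how any real hardware is implemented: the `Cache` class, the `peers` argument, and the address `0x10` are all made up for illustration, and writes go straight through to memory to keep the example short.

```python
class Cache:
    """A tiny private cache in front of a shared 'main memory' dict."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}                     # address -> cached value

    def read(self, addr):
        if addr not in self.lines:          # miss: fetch from memory
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]

    def write(self, addr, value, peers=()):
        self.lines[addr] = value
        self.memory[addr] = value           # write-through for simplicity
        for peer in peers:                  # coherence step: invalidate other copies
            peer.lines.pop(addr, None)


def run(coherent):
    memory = {0x10: 1}
    cpu, gpu = Cache(memory), Cache(memory)
    gpu.read(0x10)                          # GPU caches the old value 1
    cpu.write(0x10, 2, peers=[gpu] if coherent else [])
    return gpu.read(0x10)


stale = run(coherent=False)   # GPU keeps reading its old cached copy
fresh = run(coherent=True)    # invalidation forces a re-fetch from memory
```

Without the invalidation step the GPU keeps returning 1 even though memory holds 2. With it, the next GPU read misses and picks up the new value.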

Per Core Instruction Cache

A per-core instruction cache is a small, fast memory located inside each processor core. It stores instructions the core needs to execute tasks. By keeping frequently used instructions close, it speeds up processing.

Each core has its own instruction cache, so it doesn’t need to wait for instructions from the main memory, improving performance.

Per Core Data Cache

A per-core data cache is a small memory inside each processor core that stores frequently used data. Each core has its own data cache to quickly access the information it needs without waiting for it to come from the main memory.

Device Wide Cache

A device-wide cache is a memory shared by all processor cores or parts of a device, like a CPU or GPU. Instead of being dedicated to one core, this cache is accessible to the entire device.

It helps store data and instructions that all cores might need, improving overall performance by reducing the time it takes to fetch information.
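
How per-core and device-wide caches work together can be sketched as a two-level lookup. The class names `Core` and `SharedCache` and the hit counters below are assumptions made for this example. Real caches also have limited capacity and eviction, which this sketch leaves out.

```python
class SharedCache:
    """Device-wide cache shared by every core (often called L2)."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}
        self.hits = 0
        self.misses = 0

    def read(self, addr):
        if addr in self.lines:
            self.hits += 1
        else:
            self.misses += 1
            self.lines[addr] = self.memory[addr]   # fetch from main memory
        return self.lines[addr]


class Core:
    """A core with its own private data cache (often called L1)."""

    def __init__(self, shared):
        self.shared = shared
        self.l1 = {}
        self.l1_hits = 0

    def read(self, addr):
        if addr in self.l1:
            self.l1_hits += 1
            return self.l1[addr]
        value = self.shared.read(addr)             # fall back to the shared cache
        self.l1[addr] = value
        return value


memory = {addr: addr * 10 for addr in range(4)}
l2 = SharedCache(memory)
core_a, core_b = Core(l2), Core(l2)

core_a.read(1)   # misses core A's cache and the shared cache, goes to memory
core_a.read(1)   # hits core A's private cache
core_b.read(1)   # misses core B's private cache, hits the shared cache
```

The third read shows the benefit of the device-wide cache: core B has never touched the address, yet it avoids a trip to main memory because core A already brought the data in.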

Challenges with Software Coherency

Software coherency faces challenges because it relies on the software to manage and update shared data between processors, like the CPU and GPU.

This can be slow and complex, as the software must ensure all parts have the correct data. Mistakes in this process can lead to performance problems, data errors, or inefficient resource usage.
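
The kind of bookkeeping that software coherency requires can be illustrated with a toy write-back cache that has no automatic synchronization. The `ManualCache` class and its `flush` and `invalidate` methods are hypothetical names chosen for this sketch. Real drivers and APIs expose similar operations under their own names.

```python
class ManualCache:
    """Write-back cache with no hardware coherence; software must sync it."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}
        self.dirty = set()

    def read(self, addr):
        if addr not in self.lines:
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]

    def write(self, addr, value):
        self.lines[addr] = value        # the change stays in the cache for now
        self.dirty.add(addr)

    def flush(self):
        """Write modified lines back to main memory."""
        for addr in self.dirty:
            self.memory[addr] = self.lines[addr]
        self.dirty.clear()

    def invalidate(self):
        """Drop clean cached lines so the next read goes to main memory."""
        self.lines = {a: v for a, v in self.lines.items() if a in self.dirty}


memory = {0: 5}
cpu, gpu = ManualCache(memory), ManualCache(memory)

gpu.read(0)                  # GPU caches the value 5
cpu.write(0, 7)              # new value lives only in the CPU cache
forgot_both = gpu.read(0)    # stale: nothing was flushed or invalidated
cpu.flush()                  # memory now holds 7
forgot_invalidate = gpu.read(0)   # still stale: GPU reads its old cached copy
gpu.invalidate()
correct = gpu.read(0)        # GPU re-fetches and finally sees 7
```

The reader only sees the new value after both steps have run in the right order. Forgetting either one silently produces stale data, which is the kind of mistake described above.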

Complexity of Software Coherency

The complexity of software coherency comes from the need to keep data synchronized across multiple processors. As more cores work together, managing shared data becomes harder.

Developers must create software that correctly handles data updates and ensures all processors see the same information. This adds extra work and can lead to mistakes if not done carefully, affecting performance.

Cache coherence for GPU architectures

Cache coherence in GPU architectures keeps data consistent across multiple cores, so each core accesses the latest version of shared data.

While CPUs enforce strict cache coherence, GPUs prioritize speed and efficiency, often using relaxed coherence models. This approach allows GPUs to handle massive parallel tasks faster but may limit certain data-sharing scenarios.

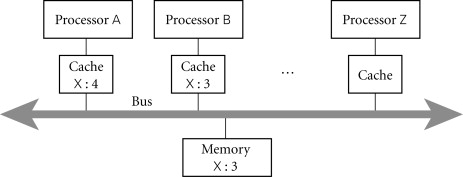

Hardware Coherency Requires an Advanced Bus Protocol

Hardware coherency needs an advanced bus protocol to help different processors communicate effectively. This protocol ensures that when one processor changes data, all other processors are updated quickly.

A good bus protocol helps reduce delays and keeps data consistent across the system. Without it, managing shared data can be slow and lead to errors in processing.
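
As a rough sketch of what such a protocol does, the following model uses a shared bus on which every cache "snoops" the requests of the others. It follows a simplified modified/shared/invalid (MSI-style) scheme with one value per cache line. The class and method names are assumptions for this example and do not correspond to any specific bus standard.

```python
class Bus:
    """Shared bus that broadcasts requests to every attached cache."""

    def __init__(self, memory):
        self.memory = memory
        self.caches = []
        self.messages = []               # log of broadcast requests

    def attach(self, cache):
        self.caches.append(cache)

    def read_miss(self, requester, addr):
        self.messages.append(("read", addr))
        for cache in self.caches:
            if cache is not requester:
                cache.snoop_read(addr)   # a modified copy is written back first
        return self.memory[addr]

    def write_intent(self, requester, addr):
        self.messages.append(("invalidate", addr))
        for cache in self.caches:
            if cache is not requester:
                cache.snoop_invalidate(addr)


class SnoopingCache:
    """Cache whose lines are 'M' (modified), 'S' (shared) or 'I' (invalid)."""

    def __init__(self, bus):
        self.bus = bus
        self.state = {}                  # addr -> state; missing means invalid
        self.data = {}
        bus.attach(self)

    def read(self, addr):
        if self.state.get(addr, "I") == "I":
            self.data[addr] = self.bus.read_miss(self, addr)
            self.state[addr] = "S"
        return self.data[addr]

    def write(self, addr, value):
        if self.state.get(addr, "I") != "M":
            self.bus.write_intent(self, addr)   # tell others to drop their copies
            self.state[addr] = "M"
        self.data[addr] = value

    def snoop_read(self, addr):
        if self.state.get(addr) == "M":
            self.bus.memory[addr] = self.data[addr]   # write back, then share
            self.state[addr] = "S"

    def snoop_invalidate(self, addr):
        if self.state.get(addr) == "M":
            self.bus.memory[addr] = self.data[addr]
        self.state[addr] = "I"


memory = {0: 1}
bus = Bus(memory)
cpu, gpu = SnoopingCache(bus), SnoopingCache(bus)

gpu.read(0)          # GPU holds the line in state S
cpu.write(0, 2)      # broadcast invalidates the GPU copy; CPU holds it in M
seen = gpu.read(0)   # GPU misses, CPU writes back, GPU reads the new value
```

No software step is needed here: the invalidate and write-back happen as a side effect of the bus messages, which is what hardware coherency provides.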

Adding Hardware Coherency to the GPU

Adding hardware coherency to the GPU means improving how it shares data with the CPU and other processors. This helps make sure that when data changes, all parts of the system are updated quickly.

By implementing hardware coherency, GPUs can work more efficiently and reduce errors, leading to better performance in tasks like gaming and complex calculations.

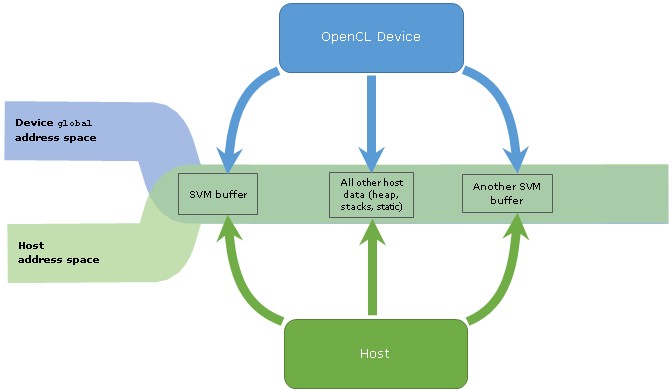

The Powerful Combination of SVM and Hardware Coherency

The powerful combination of Shared Virtual Memory and hardware coherency allows the CPU and GPU to work together smoothly. SVM enables them to share the same memory space, while hardware coherency keeps data updated across both.

This combination improves performance, reduces delays, and makes programming easier, as both processors can access the latest information without confusion or errors.

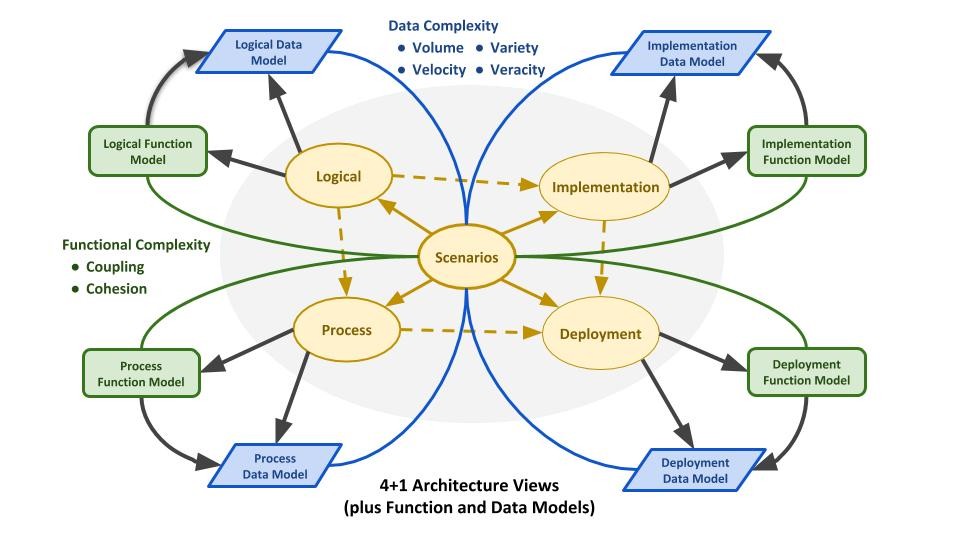

Connecting Hardware with Software: Compute APIs

Hardware is connected with software through Compute APIs (Application Programming Interfaces), such as OpenCL or CUDA. These APIs help software communicate with hardware like GPUs and CPUs, allowing programmers to use their power easily.

They provide functions and commands to perform tasks like calculations and data processing. Using Compute APIs makes it simpler to develop software that can fully utilize the hardware’s capabilities.

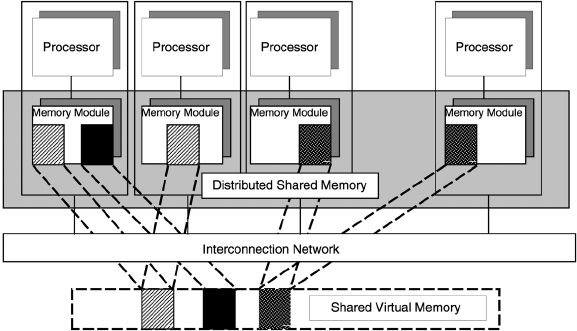

Hardware Requirements for Cache Coherency and Shared Virtual Memory

The hardware requirements include multiple processors, like CPUs and GPUs, that can share memory effectively. The system must have a strong memory controller and advanced bus protocols to keep data synchronized.

Fast memory is also important to reduce delays. These features help ensure that all processors work together smoothly and efficiently.

Cache Coherency Brings Heterogeneous Compute One Step Closer

Cache coherency brings heterogeneous computing closer by allowing different types of processors, like CPUs and GPUs, to work together more effectively. By ensuring that all processors have the latest data, it reduces confusion and improves performance.

This means tasks can be completed faster and more efficiently, making it easier to use a mix of hardware in computing systems.

Frequently Asked Questions:

1. Why can GPUs employ only small caches?

GPUs use small caches because they focus on processing many tasks at once. Most of the chip is given to compute units, and the GPU hides memory delays by switching between many threads rather than relying on large caches. Larger caches would take up more chip area and use more power. Small caches help GPUs run efficiently while handling multiple calculations quickly.

2. Is the GPU cache coherent?

GPU caches are not always coherent. This means that when one part of the GPU updates data, other parts might not see the change right away. If this is not managed, calculations can use old data, and the extra synchronization needed to prevent that can slow down performance.

3. Does a GPU have its own cache?

Yes, a GPU has its own cache. This cache stores frequently used data and instructions, allowing the GPU to access them quickly. Having its own cache helps the GPU process tasks faster and improves overall performance in graphics and calculations.

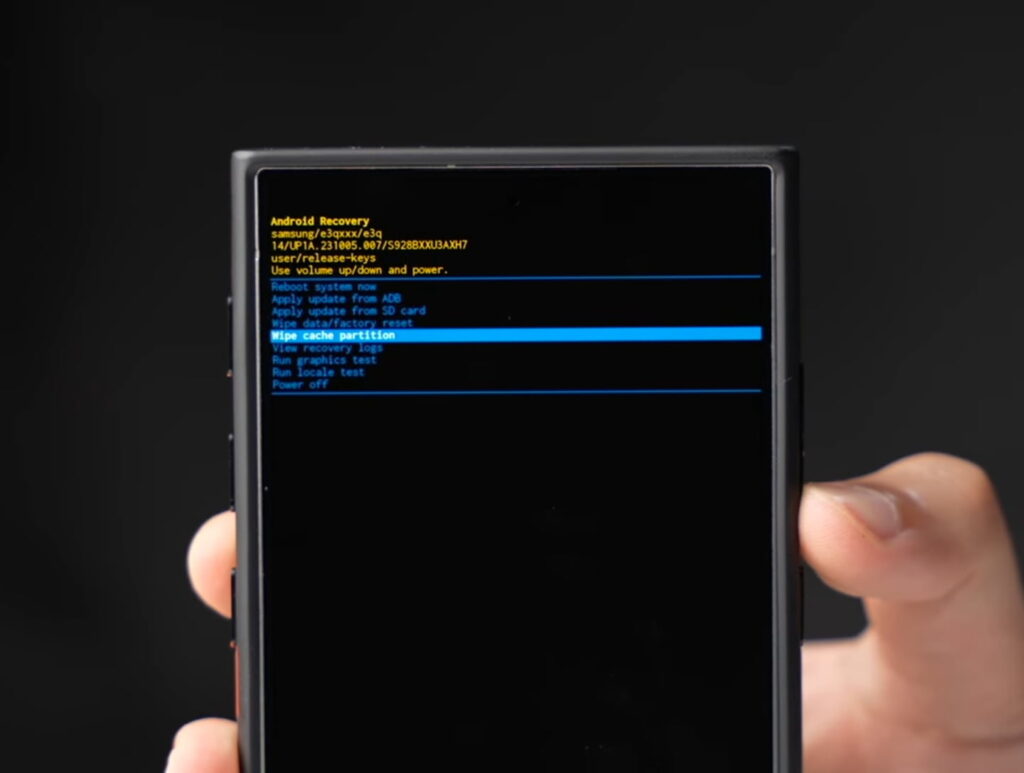

4. How to clear the cache on a GPU?

To clear the cache on a GPU, you can use software tools like GPU utilities or settings in your graphics driver. You can also restart your computer, which may help clear temporary cache data and improve performance.

5. Do GPUs have an L1 cache?

Yes, GPUs have an L1 cache. This small, fast memory is located close to the GPU cores and stores frequently used data. The L1 cache helps the GPU access information quickly, improving speed and efficiency in handling tasks.

Conclusion

In conclusion, cache coherency is important for efficient GPU and CPU collaboration, ensuring data consistency across processors. Shared Virtual Memory and hardware coherency improve performance by allowing faster data access.