When Accelerate does not fully use GPU memory, the cause is usually a setting such as a small batch size, limited compatibility, or a software constraint. Adjusting the configuration, for example by increasing the batch size or using mixed precision, or updating GPU drivers can raise memory usage.

This article explores why Accelerate might not use all GPU memory, whether because of small data batches or compatibility limits, and how tweaking settings and keeping drivers updated can help boost performance.

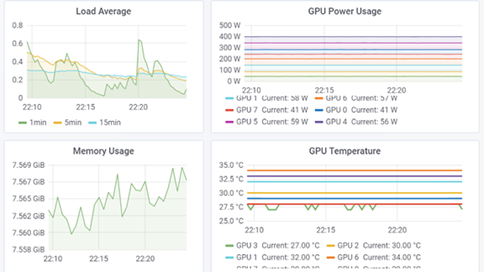

Metrics for evaluating GPU performance

Metrics for evaluating GPU performance include speed, power use, and memory capacity. Key factors are clock speed, memory bandwidth, and power efficiency.

Benchmark tests also help compare GPUs in real-world tasks, like gaming or AI, showing how well they handle different workloads.

Utilization:

GPU utilization indicates the percentage of the GPU’s processing power being actively used during tasks. Higher utilization means the GPU is working hard, often seen in intense tasks like gaming or machine learning.

Low utilization may mean the GPU is underused, which could be due to software limits, small batch sizes, or light workloads.

Memory:

GPU memory, also called VRAM, stores data like textures, images, and model information needed for tasks like gaming or AI processing. More memory allows a GPU to handle larger files and more complex graphics smoothly.

If the GPU memory is low, tasks may slow down or cause issues, especially with high-resolution games or heavy computational tasks.

Power and temperature:

GPU power and temperature are key performance indicators. Power shows how much energy the GPU needs to run; high power often means stronger performance but uses more electricity.

Temperature tells how hot the GPU gets during tasks. High temperatures can reduce GPU life, so cooling systems help keep temperatures safe, especially during heavy tasks like gaming or rendering.

GPU performance-optimization techniques

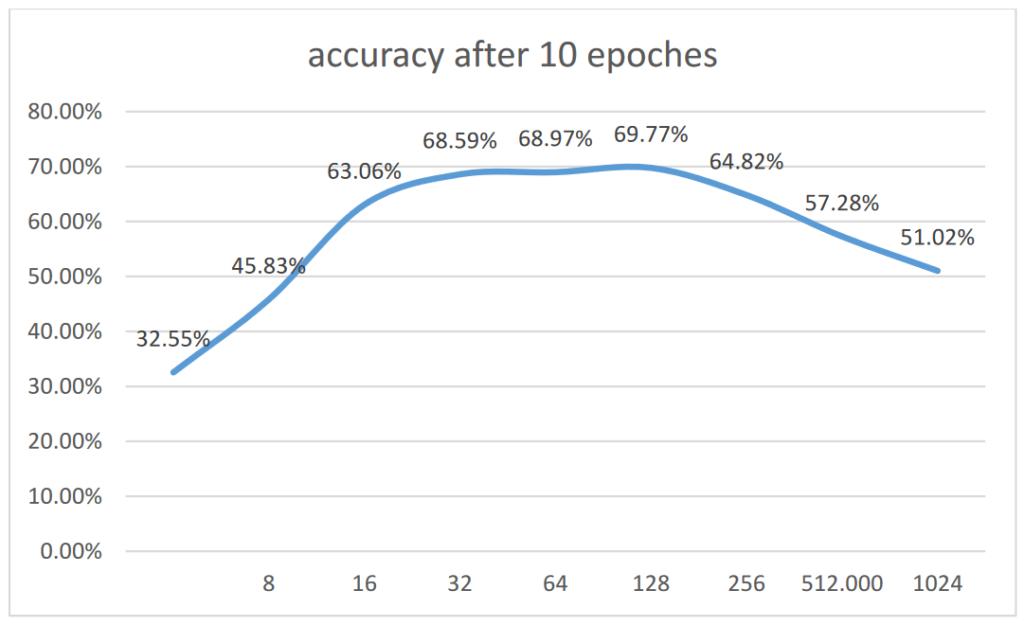

Increase the batch size to increase GPU utilization:

Increasing the batch size can help improve GPU utilization. A larger batch size means the GPU processes more data at once, keeping it busy and making better use of its power.

This leads to faster training times in tasks like machine learning. However, finding the right balance is important, as too large a batch size can cause memory issues.
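
The balance between batch size and memory can be made concrete with a rough estimate. The sketch below assumes a deliberately simplified linear memory model (a fixed cost for weights and optimizer state plus a constant cost per sample). The function name and every number in it are hypothetical, and real frameworks add overhead such as allocator caching, so treat the result as a starting point to confirm by measurement.

```python
def max_batch_size(total_bytes, fixed_bytes, per_sample_bytes, headroom=0.9):
    """Estimate the largest batch that fits under a simple linear memory model.

    Assumption: memory = fixed_bytes + batch_size * per_sample_bytes.
    `headroom` is the fraction of total memory we allow ourselves to use,
    leaving the rest free for allocator overhead.
    """
    if per_sample_bytes <= 0:
        raise ValueError("per_sample_bytes must be positive")
    usable = total_bytes * headroom - fixed_bytes
    if usable < per_sample_bytes:
        return 0  # not even one sample fits
    return int(usable // per_sample_bytes)


GIB = 1024 ** 3
MIB = 1024 ** 2

# Hypothetical numbers: a 16 GiB card, 4 GiB of fixed state, 96 MiB per sample.
batch = max_batch_size(16 * GIB, 4 * GIB, 96 * MIB)
print(batch)  # 110
```

If the measured memory use at this batch size is well below the budget, the per-sample estimate was too pessimistic and the batch can grow further.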

Use mixed-precision training to maximize GPU performance:

Mixed-precision training helps maximize GPU performance. This technique combines different number formats, using lower precision (like 16-bit) for some calculations and higher precision (like 32-bit) for others.

This method reduces memory use and speeds up processing while maintaining accuracy. With mixed precision, you can train models faster and use the GPU’s resources better.
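
To see why the higher-precision part matters, here is a small sketch that uses only the Python standard library. It simulates 16-bit floats with the `struct` module and compares a sum accumulated entirely in 16-bit with one accumulated in higher precision. It illustrates the idea only and is not how a GPU framework implements mixed precision; the helper name `to_half` is made up for this example.

```python
import struct


def to_half(x):
    """Round a Python float to the nearest 16-bit (half-precision) value."""
    return struct.unpack("e", struct.pack("e", x))[0]


# Storage: a 16-bit value takes half the bytes of a 32-bit one.
print(struct.calcsize("e"), struct.calcsize("f"))  # 2 4

step = to_half(0.001)

# Accumulating entirely in 16-bit: the running sum is rounded after every add.
half_sum = 0.0
for _ in range(10_000):
    half_sum = to_half(half_sum + step)

# Mixed approach: keep the 16-bit inputs, but accumulate in higher precision.
# (A Python float is 64-bit; it stands in for the 32-bit accumulator here.)
mixed_sum = 0.0
for _ in range(10_000):
    mixed_sum += step

print(half_sum)   # stalls well short of 10
print(mixed_sum)  # close to 10
```

The 16-bit sum stops growing once each addition is smaller than the rounding step at the current value, which is the kind of error that keeping sensitive calculations in higher precision avoids.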

Optimize the data pipeline to increase GPU utilization

Optimizing data loading:

Optimizing data loading is important for better performance. Faster data loading means the GPU spends more time working and less time waiting.

You can improve loading speed by using efficient data formats, preloading data into memory, and using multiple workers to load data in parallel. Properly organizing your data and using caching can also help keep the GPU busy and increase overall efficiency.
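
As a rough illustration of loading data in parallel with the consumer, the sketch below prefetches batches on a background thread using only the standard library. The names `prefetch` and `fake_batches` are hypothetical, and the loader is a stand-in for real disk reads or decoding.

```python
import queue
import threading


def prefetch(batches, buffer_size=4):
    """Yield items from `batches` while a background thread loads ahead."""
    buffer = queue.Queue(maxsize=buffer_size)
    done = object()  # sentinel marking the end of the stream

    def worker():
        try:
            for batch in batches:
                buffer.put(batch)  # blocks when the buffer is full
        finally:
            buffer.put(done)

    threading.Thread(target=worker, daemon=True).start()
    while True:
        item = buffer.get()
        if item is done:
            return
        yield item


def fake_batches(n):
    """Hypothetical loader: stands in for disk reads or decoding."""
    for i in range(n):
        yield [i] * 3


loaded = list(prefetch(fake_batches(5), buffer_size=2))
print(loaded)
```

The bounded queue keeps memory use predictable: the loader runs at most `buffer_size` batches ahead. This sketch does not forward loader exceptions to the consumer, which a production version would need to handle.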

Optimizing data transfer between CPU and GPU:

Optimizing data transfer between the CPU and GPU is crucial for better performance. Faster transfers mean the GPU can start working sooner. You can improve this by using faster data formats, minimizing data size, and keeping data close to the GPU in memory.

Using techniques like asynchronous transfer allows the CPU and GPU to work together more effectively, reducing wait times.
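
The article does not name a framework, so the following sketch assumes PyTorch. It shows two settings commonly used for this purpose: pinned (page-locked) host memory on the `DataLoader`, and `non_blocking=True` on the copy to the device. The helper names `make_loader` and `to_device` are made up for illustration, and the batch is assumed to be a tuple of tensors.

```python
def make_loader(dataset, batch_size=64, num_workers=4):
    """Build a DataLoader that loads in parallel and uses pinned host memory."""
    from torch.utils.data import DataLoader  # imported lazily; requires PyTorch

    return DataLoader(
        dataset,
        batch_size=batch_size,
        shuffle=True,
        num_workers=num_workers,  # parallel loading processes
        pin_memory=True,          # page-locked memory enables async copies
    )


def to_device(batch, device):
    """Start host-to-device copies without blocking the CPU thread."""
    return tuple(t.to(device, non_blocking=True) for t in batch)
```

A non-blocking copy only overlaps with other work when the source tensor sits in pinned memory, which is why the two settings are usually used together.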

Optimizing data preprocessing:

Optimizing GPU data preprocessing helps improve performance in tasks like training models. This involves cleaning and preparing data faster before it reaches the GPU.

You can speed up preprocessing by using efficient libraries, reducing data size, and performing operations in parallel. Properly organizing your data and using caching can also help, ensuring the GPU gets data quickly and can work more efficiently.
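
The parallel and caching ideas can be sketched with the standard library. The `normalize` step and the sample data below are hypothetical. Threads are used for simplicity; they help most when the work is I/O-bound or runs in native code, while pure-Python CPU-bound work would typically need processes instead.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache


@lru_cache(maxsize=None)
def normalize(sample):
    """Hypothetical preprocessing step: scale a tuple of pixel values to [0, 1]."""
    return tuple(value / 255 for value in sample)


def preprocess_all(samples, workers=4):
    """Preprocess samples in parallel, preserving input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(normalize, samples))


samples = [(0, 128, 255), (255, 255, 0), (0, 128, 255)]  # third repeats the first
result = preprocess_all(samples)
print(result[1])               # (1.0, 1.0, 0.0)
print(normalize.cache_info())  # hit and miss counts for the cache
```

Caching pays off only when the same inputs recur, for example when the same samples are revisited on every epoch.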

What Is GPU Utilization?

GPU utilization measures how much of the GPU’s power is being used during tasks. It is shown as a percentage, where 100% means the GPU is fully working.

High utilization means the GPU is busy and doing its job well, especially in gaming or data processing. Low utilization can indicate that the GPU is underused or waiting for more data.

Why Is Monitoring GPU Utilization Important?

Monitoring GPU utilization is important for ensuring the GPU is working efficiently. It helps identify performance issues, prevents overheating, and ensures tasks run smoothly without wasting resources.

Improved Resource Allocation:

Improved resource allocation means using available computing power and memory more effectively. By carefully distributing tasks between the CPU and GPU, you can ensure that both work efficiently.

This leads to faster processing, better performance in tasks like gaming or machine learning, and less wasted energy. Overall, smart resource allocation helps make the most of your hardware and enhances user experience.

Refining Performance:

Refining performance involves making changes to improve how well a system works. This can include adjusting settings, optimizing software, or upgrading hardware.

By refining performance, you can achieve faster speeds, smoother operation, and better efficiency in tasks like gaming or data processing. Regular checks and updates help maintain high performance, ensuring that the system runs at its best over time.

Saving Costs in Cloud Environments:

Saving costs in cloud environments means using resources wisely to reduce expenses. You can save money by choosing the right plan, shutting down unused services, and optimizing storage. Monitoring usage helps you identify wasteful spending.

Additionally, using auto-scaling can adjust resources based on demand, ensuring you only pay for what you need while keeping performance high.

Preventing Bottlenecks and Enhancing Workflows:

Preventing bottlenecks means making processes smoother and faster. Bottlenecks occur when one part of a system slows down the whole operation. To prevent this, identify slow points and improve them, for example by upgrading hardware or optimizing tasks.

Streamlining workflows by using better tools and clear communication also helps teams work more efficiently, leading to better productivity and results.

Reasons for Low GPU Utilization

CPU bottleneck:

A CPU bottleneck happens when the CPU cannot keep up with the GPU’s processing power, leading to low GPU utilization.

This means the GPU is ready to work, but the CPU is too slow to send it data quickly enough. As a result, the GPU sits idle and does not perform at its full potential.
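
A small worked example shows how much utilization a slow CPU can cost. It uses a simplified timing model with hypothetical numbers: each training step consists of one batch prepared on the CPU and one compute pass on the GPU.

```python
def gpu_utilization(load_ms, compute_ms, overlapped):
    """Percent of each step the GPU spends computing, under a simple timing model.

    Assumption: one step = one batch load on the CPU + one compute on the GPU.
    Without overlap the two run back to back; with overlap the step takes as
    long as the slower of the two.
    """
    step_ms = max(load_ms, compute_ms) if overlapped else load_ms + compute_ms
    return 100 * compute_ms / step_ms


# Hypothetical timings: the CPU needs 30 ms per batch, the GPU only 10 ms.
print(gpu_utilization(30, 10, overlapped=False))  # 25.0
print(gpu_utilization(30, 10, overlapped=True))   # about 33.3
print(gpu_utilization(8, 10, overlapped=True))    # 100.0
```

Overlapping loading with compute helps, but while the CPU side remains slower than the GPU side, utilization stays capped. Only when loading drops below the compute time does the GPU stay fully busy in this model.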

Memory bottleneck:

A memory bottleneck occurs when the GPU does not have enough memory or bandwidth to handle data quickly, leading to low GPU utilization.

This means that while the GPU is ready to process tasks, it waits for data to be loaded from slower memory sources. To fix this, you can upgrade to a GPU with more memory or optimize your data management and loading processes.

Inefficient parallelization:

Inefficient parallelization occurs when tasks are not divided properly for the GPU to process. This leads to low GPU utilization because the GPU sits idle, waiting for more work. When tasks are not optimized for parallel processing, the GPU cannot use its full power.

To improve this, ensure that tasks are well-distributed and designed to take advantage of the GPU’s ability to handle many operations at once.

Low compute intensity:

Low compute intensity means that the tasks being processed do not require much power from the GPU, leading to low GPU utilization. When tasks are simple or lightweight, the GPU doesn’t need to work hard, resulting in idle time.

To improve utilization, focus on more complex tasks that can fully leverage the GPU’s capabilities, such as advanced calculations or high-resolution graphics processing.

Use of single precision vs. double precision:

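The choice between single precision (32-bit) and double precision (64-bit) affects how much useful work the GPU gets through. Single precision uses less memory and is faster; on many GPUs, double-precision arithmetic runs at a fraction of the single-precision rate, so using it where it is not needed makes the GPU underperform.

For complex calculations that require high accuracy, however, single precision might not be sufficient, so the precision should match what the task actually needs.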
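
The memory and accuracy sides of this trade-off can be seen with the standard library alone. The sketch below stores the same values as 32-bit and 64-bit floats and compares the footprint and the value actually stored. It runs on the CPU and says nothing about GPU speed; it only illustrates the two formats.

```python
import struct
from array import array

values = [0.1] * 1_000_000

single = array("f", values)  # 32-bit floats
double = array("d", values)  # 64-bit floats

print(single.itemsize * len(single))  # 4000000 bytes
print(double.itemsize * len(double))  # 8000000 bytes

# Round-trip 0.1 through each format to see the stored value.
as_single = struct.unpack("f", struct.pack("f", 0.1))[0]
as_double = struct.unpack("d", struct.pack("d", 0.1))[0]
print(as_single)  # 0.10000000149011612
print(as_double)  # 0.1
```

Single precision halves the memory footprint but keeps only about seven significant decimal digits, which is the accuracy limit the text refers to.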

Synchronization and blocking operations:

Synchronization and blocking operations can lead to low GPU utilization by making the GPU wait for the CPU or other tasks to finish. When one part of a process is blocked, the GPU cannot continue working, resulting in idle time.

To improve utilization, reduce blocking operations and use asynchronous methods, allowing the GPU to keep processing while waiting for other tasks to complete.

Monitoring and Improving GPU Utilization for Deep Learning

Monitoring GPU utilization for deep learning helps ensure the GPU is working efficiently. Use tools like nvidia-smi to track performance and identify low usage.
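
As a minimal sketch of tracking these numbers from a script, the code below calls `nvidia-smi` with its CSV query options and parses the result. The function names and the sample text are made up for illustration, and the parser assumes every field is numeric, so it would need extra handling on GPUs that report a field as unsupported.

```python
import shutil
import subprocess

QUERY = "utilization.gpu,memory.used,memory.total"


def parse_gpu_stats(csv_text):
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into dicts."""
    stats = []
    for line in csv_text.strip().splitlines():
        util, used, total = (float(field) for field in line.split(","))
        stats.append({
            "utilization_pct": util,
            "memory_used_mib": used,
            "memory_total_mib": total,
            "memory_pct": 100 * used / total,
        })
    return stats


def read_gpu_stats():
    """Query nvidia-smi if it is installed; return [] otherwise."""
    if shutil.which("nvidia-smi") is None:
        return []
    output = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_stats(output)


# Made-up sample output for two GPUs, used here so the example runs anywhere.
sample = "35, 4096, 16384\n92, 15000, 16384\n"
for gpu in parse_gpu_stats(sample):
    print(gpu["utilization_pct"], round(gpu["memory_pct"], 1))
```

Calling `read_gpu_stats()` periodically during training gives a simple log of utilization and memory use over time. A GPU that shows low values on both, like the first one in the sample, is a candidate for a larger batch size.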

To improve utilization, adjust batch sizes, optimize data loading, and implement mixed-precision training. Regularly checking and tuning these factors can lead to faster training times and better model performance, making the most of your GPU resources.
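
Assuming "Accelerate" here refers to the Hugging Face Accelerate library used with PyTorch (the article does not say so explicitly), a training loop with mixed precision enabled might look like the sketch below. The function name `train` and its parameters are hypothetical, and the dataloader is assumed to yield `(inputs, targets)` pairs.

```python
def train(model, optimizer, dataloader, loss_fn, epochs=1, mixed_precision="fp16"):
    """Minimal Accelerate training loop with mixed precision enabled."""
    from accelerate import Accelerator  # imported lazily; requires `accelerate`

    accelerator = Accelerator(mixed_precision=mixed_precision)
    # prepare() moves everything to the right device and wraps it as needed.
    model, optimizer, dataloader = accelerator.prepare(model, optimizer, dataloader)

    model.train()
    for _ in range(epochs):
        for inputs, targets in dataloader:
            optimizer.zero_grad()
            with accelerator.autocast():
                loss = loss_fn(model(inputs), targets)
            accelerator.backward(loss)  # handles loss scaling for fp16
            optimizer.step()
    return model
```

The batch size is not set here: it comes from the `DataLoader` passed in, so that is where to raise it when memory use is low. Passing `mixed_precision="no"` turns mixed precision off, and `"bf16"` is an alternative on hardware that supports it.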

Strategy for optimizing GPU usage

To optimize GPU usage, increase batch sizes to keep the GPU busy and use mixed precision to speed up processing while saving memory. Optimize data loading to reduce waiting times and identify bottlenecks that slow down performance.

Regularly monitor GPU utilization and implement asynchronous operations to allow the GPU to work while waiting for other tasks, minimizing idle time and enhancing efficiency.

Frequently Asked Questions:

1. Why is my GPU not using enough memory?

Your GPU may use little memory if the game or program is not demanding, or if the settings are low. It can also happen when the task itself, such as rendering or graphics processing, simply does not need much video memory.

2. How to fix your GPU memory?

To fix GPU memory issues, try closing unused programs to free up memory, update your GPU drivers, and lower graphics settings in games. If problems continue, check for hardware issues or consider upgrading your GPU for better performance.

3. Does GPU memory affect speed?

Yes, GPU memory affects speed. If the memory is low, the GPU may slow down because it cannot store enough data. More memory allows the GPU to handle bigger tasks faster, improving performance in games and applications.

4. How to fully utilize GPU memory?

To fully utilize GPU memory, use high-resolution settings in games, run demanding applications, and keep your GPU drivers updated. Also, close other programs that use memory and consider using software to optimize performance for better results.

5. Is there a way to force Accelerate to use all available GPU memory?

Yes, to a degree. Memory use follows the workload, so you can push Accelerate toward using all available GPU memory by adjusting settings in your software or application, such as the batch size. Check for options that control memory allocation, and ensure your GPU drivers are updated to maximize performance and memory usage.

Conclusion

In conclusion, to ensure Accelerate fully utilizes GPU memory, optimize your settings by increasing batch sizes and using mixed precision. Regularly updating drivers and monitoring performance can enhance GPU efficiency.